There are situations when we have to run the select queries on MySQL databases and redirect the output in .cav format. This is required when we have to share the data with the developer or send it to some ETL job for external tables. In addition, if you are running MySQL on AWS RDS you get limited options to get the MySQL query output to CSV. As an alternative, you can use the AWS S3 bucket but again it’s a complex and cost-involved way of getting MySQL query output to CSV. I am going to show you an easy alternative that will work on a normal Linux command prompt. Using this you can fetch a long SQL output in CSV from MySQL RDS or from on promises MySQL Database instance.

Step 1: log in to any jump host or bastion host

Step 2: if your SQL output is going to take a long time use screen

Step 3 Run MySQL export batch command and sed Linux command

mysql -A -h testdb01.us-east-1.rds.amazonaws.com -u root -p --batch -e "select * from testdb01.table_x where date_created >='2021-07-01 00:00:00' AND LENGTH(emp_id) < 30 order by date_created;" | sed 's/\t/","/g;s/^/"/;s/$/"/;s/\n//g' > mysq2csv_output.csv

Step 4: once the command is completed you will see the output in .csv under the file mysq2csv_output.csv

Hope you like the article

References: https://dev.mysql.com/doc/refman/8.0/en/select-into.html

Read More

Take MySQL backup From Jenkins Job

How to fix ORA-28368: cannot auto-create wallet

AWS Services and their Azure alternatives

How to Manage AWS S3 Bucket with AWS CLI

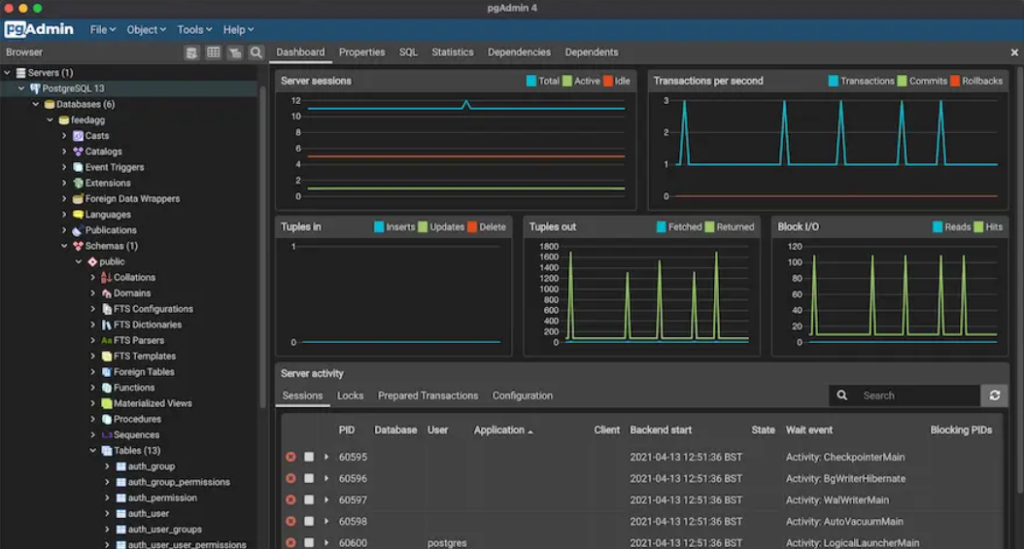

How to connect PostgreSQL Database from PgAdmin

How to create AWS rds PostgreSQL Database

Convert pem to ppk

How to Install PuTTy on Window

How to Install and create AWS EC2 Instance using Terraform

AWS MySQL RDS Database Creation using AWSCLI

How to Create MySQL Database with AWS RDS

How to connect to AWS MySQL / MariaDB RDS or EC2 database from MySQL WorkBench