Why AWS?

Cloud is becoming a vital part of database administration because it provides database services and the infrastructure to run database ecosystems almost instantly. AWS (Amazon Web Services) is one of the pioneers in the cloud and is positioned as a leader in the Gartner Magic Quadrant. Learning more cloud infrastructure technologies will give more mileage to your administrator career. In this article, you will find the AWS services that database administrators should know, because they are fundamental to running database operations.

Essential AWS Services List for Database Administrators (DBAs)

Network

VPCs

Subnets

Elastic IPs

Internet Gateways

Network ACLs

Route Tables

Security Groups

Private Subnets

Public Subnets

AWS Direct Connect
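
To make the VPC and subnet items above more concrete, here is a minimal sketch of how a DBA might plan public and private subnets before creating anything in AWS. It uses only Python's standard `ipaddress` module. The `10.0.0.0/16` range, the `/24` subnet size, and the two-public/two-private split are assumptions chosen for illustration, not a recommendation. The only AWS-specific fact it relies on is that AWS reserves five addresses in every subnet.

```python
import ipaddress
from itertools import islice

# Hypothetical VPC range; replace it with your own CIDR block.
VPC_CIDR = "10.0.0.0/16"
# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr, new_prefix, count):
    """Return the first `count` subnets of size /new_prefix inside the VPC."""
    vpc = ipaddress.ip_network(vpc_cidr)
    return list(islice(vpc.subnets(new_prefix=new_prefix), count))


def usable_hosts(subnet):
    """Number of addresses left for instances after the AWS reservations."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


subnets = plan_subnets(VPC_CIDR, new_prefix=24, count=4)

# Illustrative split: public subnets for a bastion host, private ones for databases.
plan = {
    "public": subnets[:2],
    "private": subnets[2:],
}

if __name__ == "__main__":
    for tier, nets in plan.items():
        for net in nets:
            print(f"{tier:8} {net}  usable hosts: {usable_hosts(net)}")
```

Keeping databases in the private subnets and exposing only a bastion host in a public subnet is a common layout, and it ties in with the bastion host item later in this list.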

Virtual Machine

EC2

Amazon WorkSpaces

Storage

EBS

EFS

S3

Database as a Service (Amazon RDS)

MySQL / MariaDB

PostgreSQL

Oracle

Microsoft SQL Server

Amazon Aurora (PostgreSQL-compatible and MySQL-compatible)

Database Managed Services

Amazon DynamoDB

Amazon Elasticsearch Service (now Amazon OpenSearch Service)

Amazon DocumentDB

Messaging & Event-Based Processing

Apache Kafka (Amazon MSK)

Warehousing / OLAP / Analytics Staging DB

Amazon Redshift

Monitoring

Amazon CloudWatch

Amazon Managed Grafana

Amazon Managed Service for Prometheus

Notification & Email Service

Amazon Simple Notification Service (SNS)

Security

IAM

Secrets Manager
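
IAM and Secrets Manager usually work together: database credentials live in a secret, and an IAM policy decides who may read it. The sketch below builds such a policy document as JSON using only the standard library. The ARN, account ID, and secret name are placeholders invented for this example, and the helper name `secret_read_policy` is hypothetical. The two action names are real Secrets Manager actions.

```python
import json


def secret_read_policy(secret_arn):
    """Build an IAM policy document that allows reading one specific secret."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadDatabaseSecret",
                "Effect": "Allow",
                "Action": [
                    "secretsmanager:GetSecretValue",
                    "secretsmanager:DescribeSecret",
                ],
                # Scope the permission to a single secret instead of "*".
                "Resource": secret_arn,
            }
        ],
    }


# Placeholder ARN: the region, account ID, and secret name are made up.
EXAMPLE_ARN = (
    "arn:aws:secretsmanager:us-east-1:123456789012:"
    "secret:example/db-credentials-AbCdEf"
)

policy_json = json.dumps(secret_read_policy(EXAMPLE_ARN), indent=2)

if __name__ == "__main__":
    print(policy_json)
```

Limiting `Resource` to one secret follows the least-privilege idea: a backup job, for example, can read only the credentials of the database it backs up.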

Database Task Automation

AWS Batch

AWS Lambda

AWS CloudFormation
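
As an illustration of the automation idea, the following sketch generates a small CloudFormation template for an RDS PostgreSQL instance as a Python dictionary and serializes it to JSON. The logical name `ExampleDatabase`, the parameter names, the `db.t3.micro` class, and the 20 GiB size are assumptions for the example. A real template would normally also set subnet groups, security groups, and backup settings.

```python
import json


def rds_postgres_template(instance_class="db.t3.micro", storage_gib=20):
    """Return a minimal CloudFormation template for one PostgreSQL instance."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative RDS PostgreSQL instance (sketch only)",
        "Parameters": {
            "DBUsername": {"Type": "String", "Default": "dbadmin"},
            # NoEcho keeps the password out of console and API output.
            "DBPassword": {"Type": "String", "NoEcho": True, "MinLength": 8},
        },
        "Resources": {
            "ExampleDatabase": {
                "Type": "AWS::RDS::DBInstance",
                "Properties": {
                    "Engine": "postgres",
                    "DBInstanceClass": instance_class,
                    # CloudFormation expects AllocatedStorage as a string.
                    "AllocatedStorage": str(storage_gib),
                    "MasterUsername": {"Ref": "DBUsername"},
                    "MasterUserPassword": {"Ref": "DBPassword"},
                    "PubliclyAccessible": False,
                    "StorageEncrypted": True,
                },
            }
        },
        "Outputs": {
            "Endpoint": {
                "Value": {"Fn::GetAtt": ["ExampleDatabase", "Endpoint.Address"]}
            }
        },
    }


template = rds_postgres_template()
template_json = json.dumps(template, indent=2)

if __name__ == "__main__":
    print(template_json)
```

The printed JSON can be saved to a file and reviewed in version control before anyone deploys it, which is one of the main reasons DBAs adopt CloudFormation.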

Command-line interface (CLI) to Manage AWS Services

AWS CLI
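
Two AWS CLI calls that DBAs tend to reach for early are listing RDS instances and copying a backup file to S3. The sketch below only assembles those command lines in Python and prints them, so it runs without the CLI installed and without credentials. The bucket name, file name, and region are placeholders, and the helper function names are hypothetical.

```python
import shlex


def list_rds_instances_cmd(region):
    """Command line that lists RDS instances with their engine and status."""
    return [
        "aws", "rds", "describe-db-instances",
        "--region", region,
        "--query", "DBInstances[].[DBInstanceIdentifier,Engine,DBInstanceStatus]",
        "--output", "table",
    ]


def upload_backup_cmd(local_file, bucket, key):
    """Command line that copies a local backup file to an S3 bucket."""
    return ["aws", "s3", "cp", local_file, f"s3://{bucket}/{key}"]


# Placeholder values for illustration only.
list_cmd = list_rds_instances_cmd("us-east-1")
upload_cmd = upload_backup_cmd(
    "backup.sql.gz", "example-backup-bucket", "mysql/backup.sql.gz"
)

if __name__ == "__main__":
    # shlex.join quotes arguments so the lines can be pasted into a shell.
    print(shlex.join(list_cmd))
    print(shlex.join(upload_cmd))
```

Building the arguments as a list rather than one long string avoids quoting mistakes if the commands are later passed to `subprocess.run`.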

Migration

AWS Database Migration Service (DMS)

Budget

AWS Cost Explorer

AWS Budgets

Some Other Services & Combinations Worth Exploring

Bastion Host For DBA

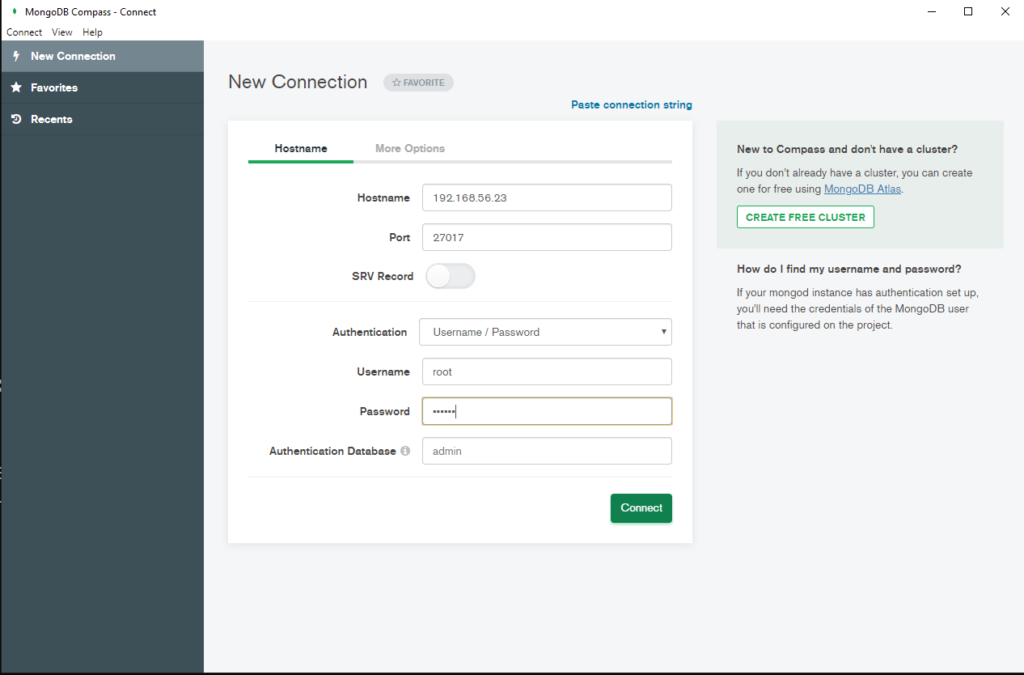

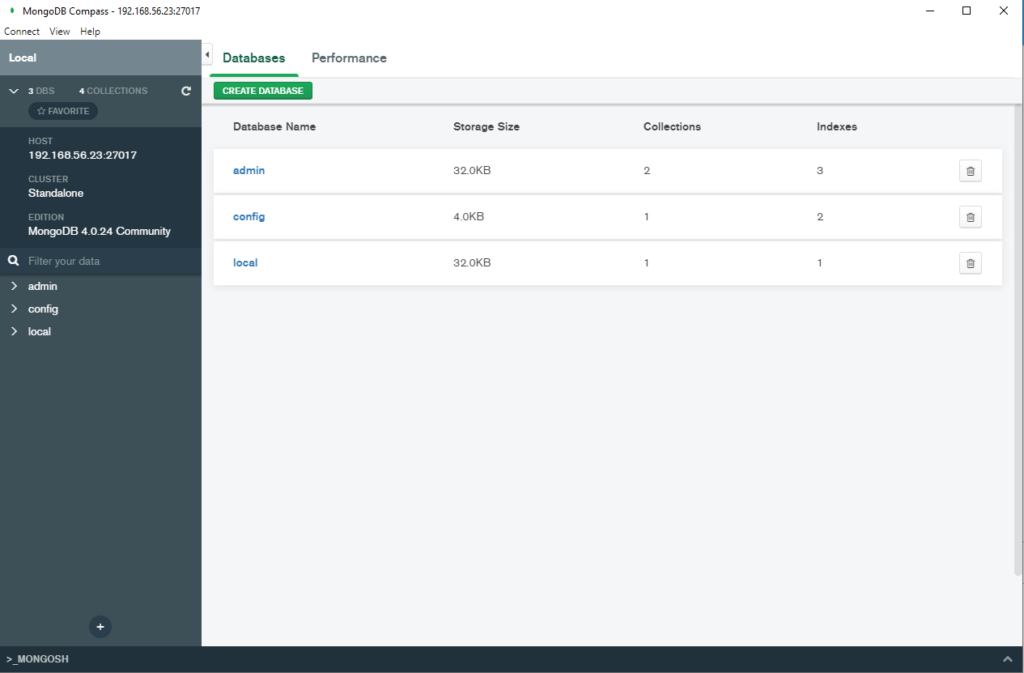

MongoDB running on EC2

ELK (Elasticsearch, Logstash, Kibana) running on EC2

Tunnels on non-standard ports for database connections, for more security

Pgpool-II or PgBouncer for PostgreSQL databases
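
The bastion host and tunnel items above can be combined: the database stays in a private subnet, and the DBA reaches it through an SSH tunnel that listens on a non-standard local port. The sketch below only builds the `ssh` command line, so it runs anywhere. The host names, user, key path, and port numbers are placeholders, and `ssh_tunnel_command` is a hypothetical helper.

```python
import shlex


def ssh_tunnel_command(bastion_host, bastion_user, db_host, db_port,
                       local_port, bastion_ssh_port=22, key_path=None):
    """Build an ssh command that forwards a local port to the database."""
    cmd = ["ssh", "-N", "-p", str(bastion_ssh_port)]  # -N: forward only, no shell
    if key_path:
        cmd += ["-i", key_path]
    # Bind to 127.0.0.1 so the tunnel is reachable only from this machine.
    cmd += ["-L", f"127.0.0.1:{local_port}:{db_host}:{db_port}"]
    cmd.append(f"{bastion_user}@{bastion_host}")
    return cmd


# Placeholder values: none of these hosts or paths are real.
command = ssh_tunnel_command(
    bastion_host="bastion.example.com",
    bastion_user="ec2-user",
    db_host="example-db.internal.example.com",
    db_port=5432,
    local_port=15432,
    bastion_ssh_port=2222,
    key_path="~/.ssh/example-key.pem",
)

if __name__ == "__main__":
    print(shlex.join(command))
```

With the tunnel open, a client such as `psql` or pgAdmin would connect to `127.0.0.1` on port `15432` instead of the database endpoint itself.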

Stay Tuned For Latest Database, Cloud & Technology Trends

Read More >>

How to Fix Pg_ctl command not found

Sometimes after a fresh PostgreSQL install if we want to

HA Proxy For MySQL Master – Slave

There are scenarios where we have to provide the high

How to Rebuild MongoDB Replica-Set Node Fast in Few Minutes

Sometimes it happens that the MongoDB replica set node goes

Free Database Client For PostGresql

1. pgAdmin Single pack with Database client, multiple Database Administration

Essential AWS Services for Database Administrators to Learn

Why AWS? Cloud is becoming a vital part of Database

Running MongoDB on Docker Compose

In this article, we will discuss how DBA can run

How to Fix Cannot open your terminal ‘/dev/pts/2’ – please check

Normally we see a common error when we switch or

How to fix Rocky Linux full screen issue on Oracle Virtual Box

In this article, we will fix the rocky Linux full-screen

MySQL query output to csv

There are situations when we have to run the select

Take MySQL backup From Jenkins Job

Take MySQL Database Backup From Jenkins In this post, we

Apache Tomcat: java.net.BindException: Permission denied (Bind failed) :443

How to fix error: Apache tomcat : java.net.BindException: Permission denied

How to Configure Oracle Transparent Data Encryption (TDE) on Standby Database

How to Configure Oracle Transparent Data Encryption (TDE) on Standby

How to fix ORA-28368: cannot auto-create wallet

How to fix ORA-28368: cannot auto-create wallet While starting the

AWS Services and their Azure alternatives

AWS vs Azure Service Names In this article, I have

How to Manage AWS S3 Bucket with AWS CLI

How to Manage AWS S3 Bucket with AWS CLI (Command

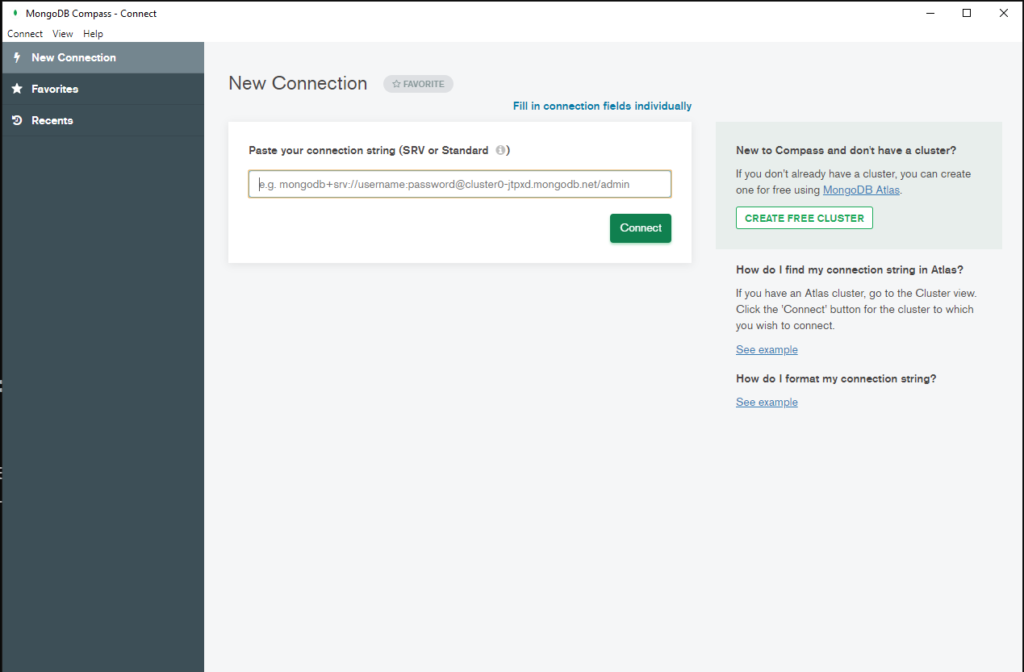

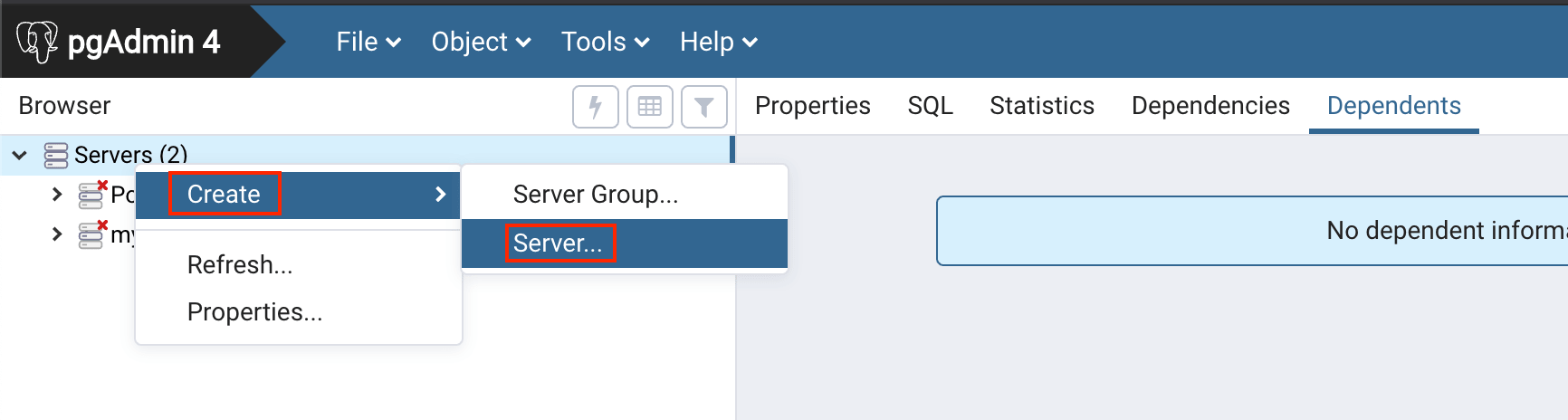

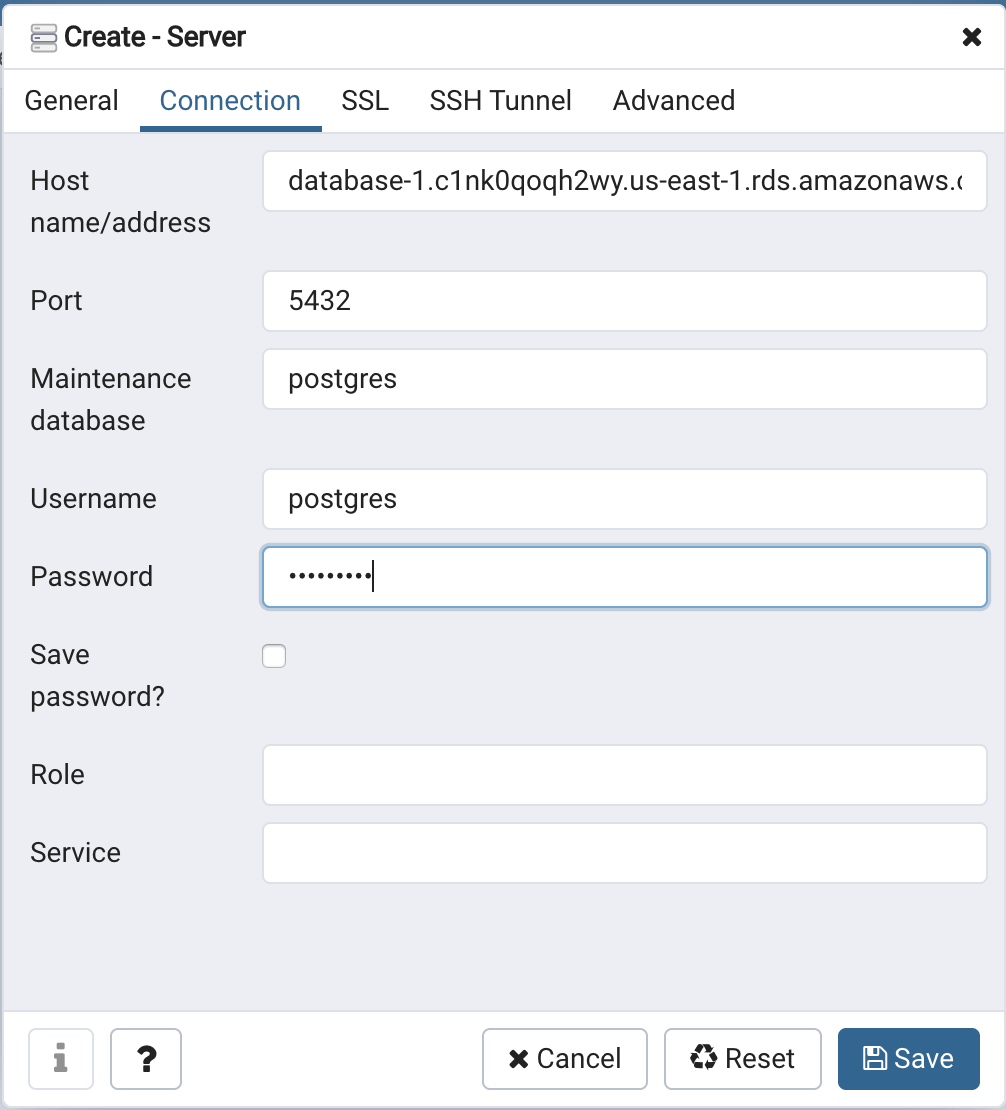

How to connect PostgreSQL Database from PgAdmin

How to connect PostgreSQL Database from PgAdmin In this article,

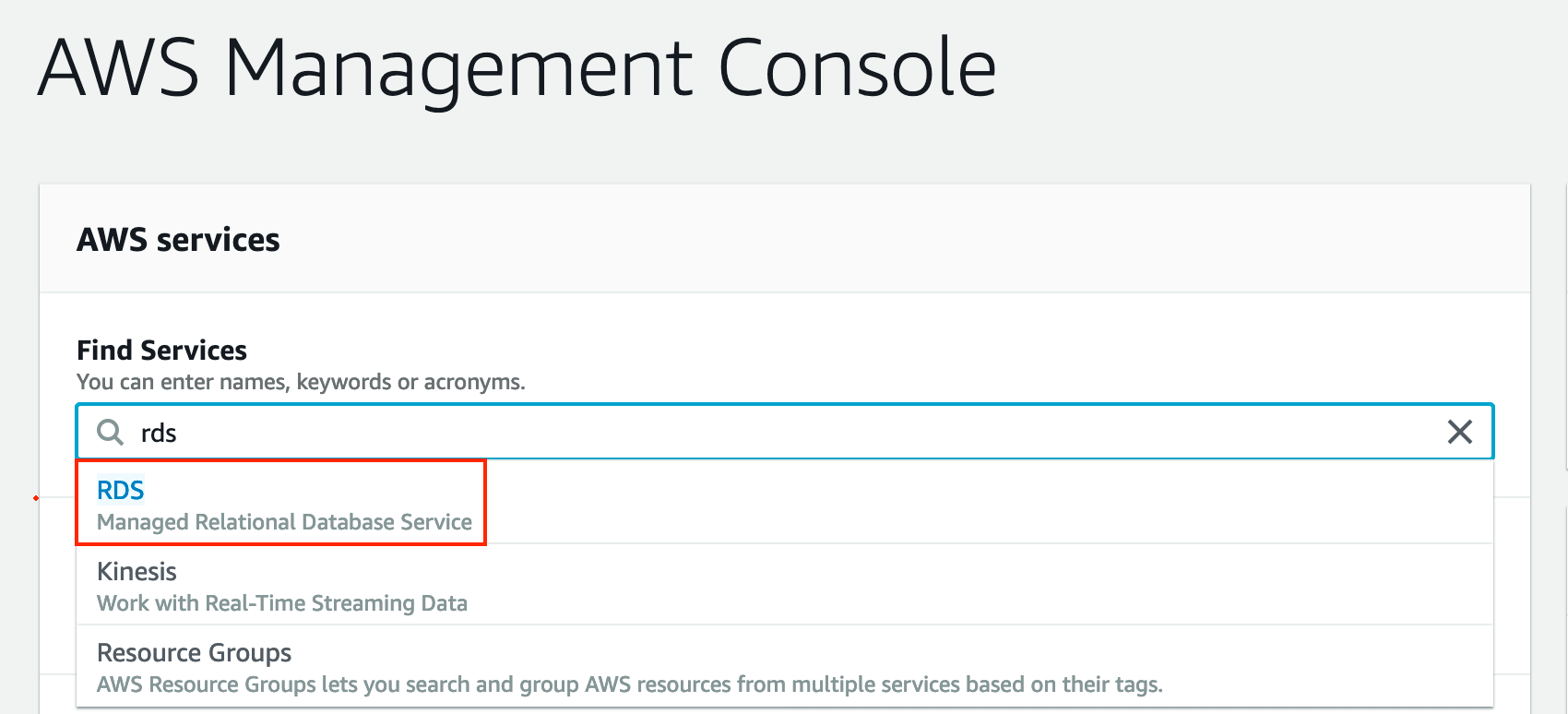

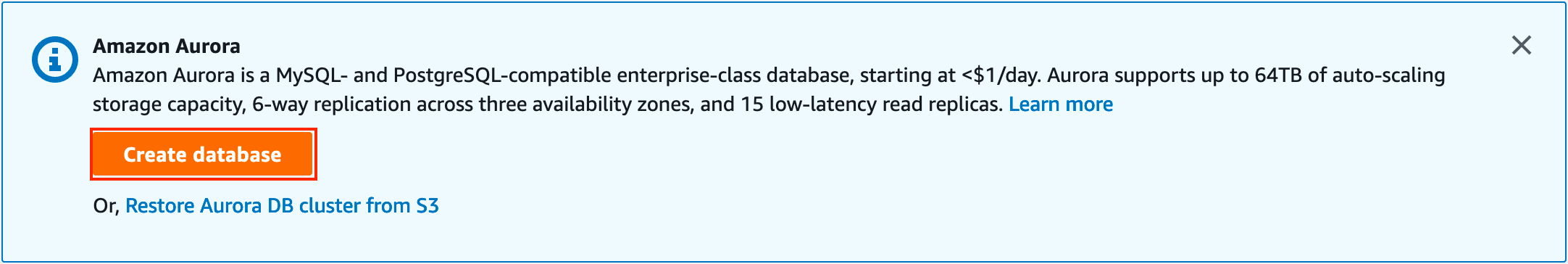

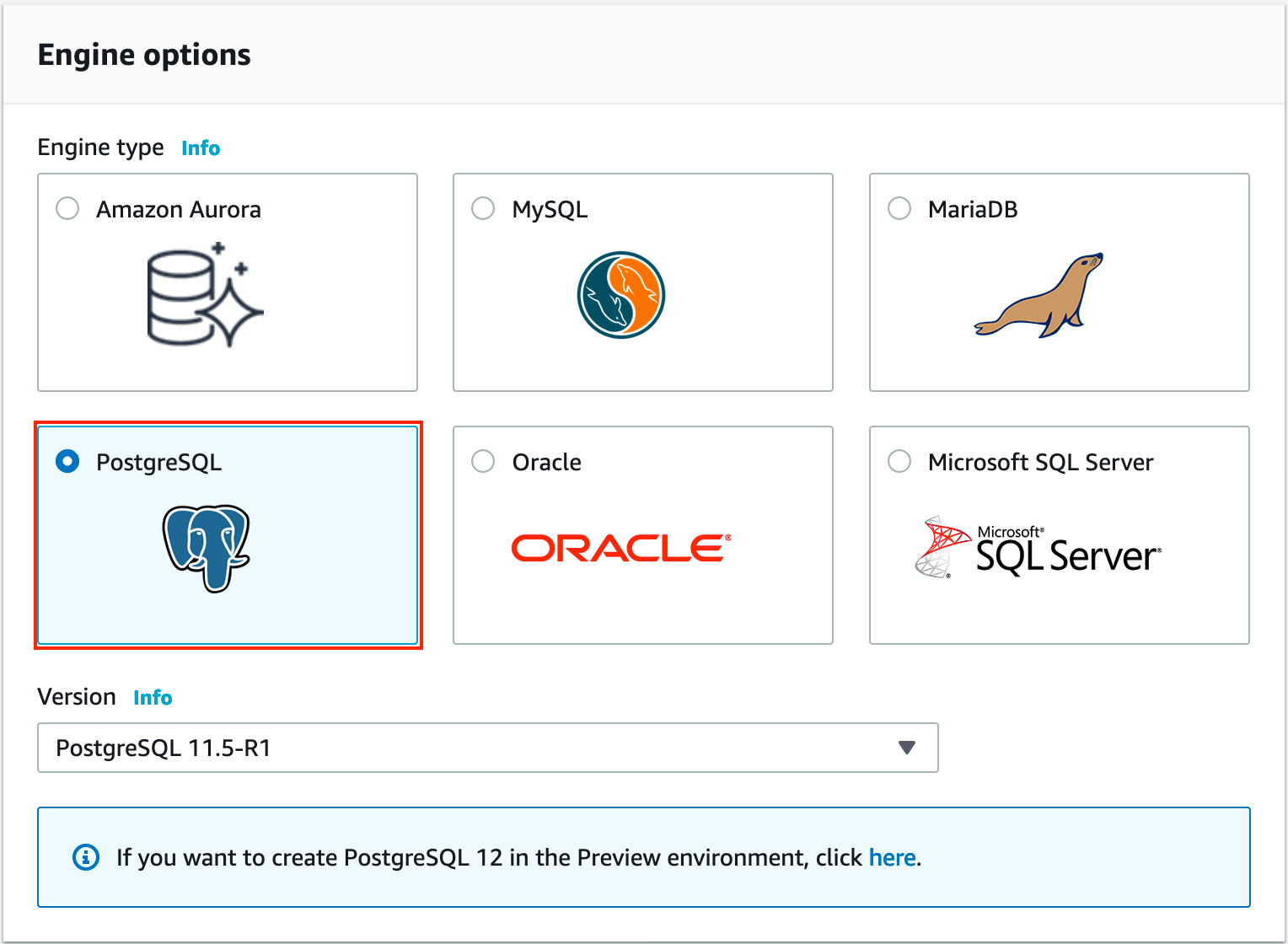

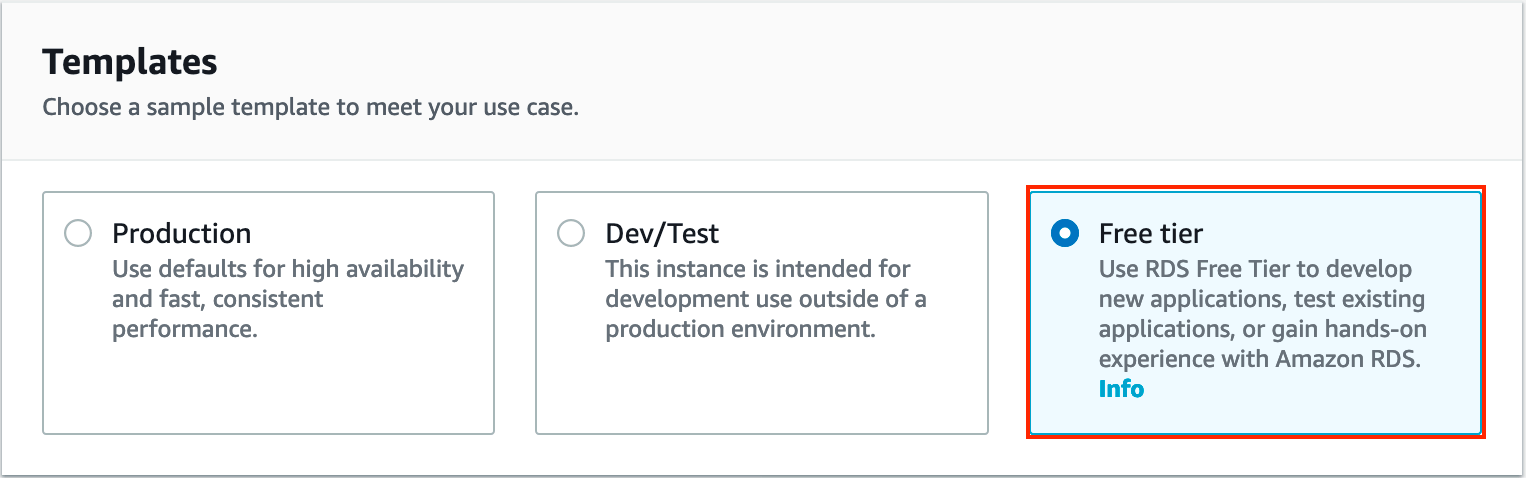

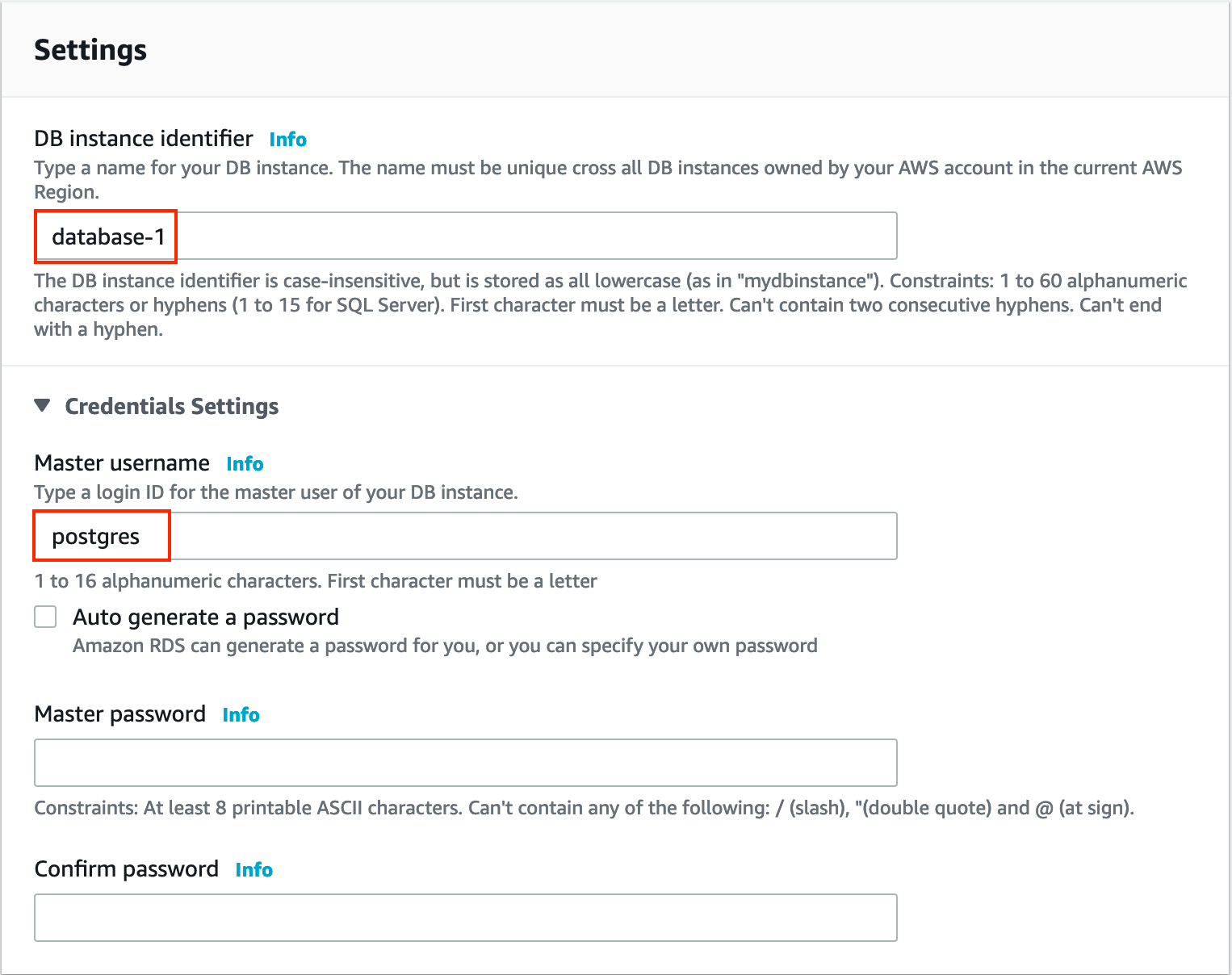

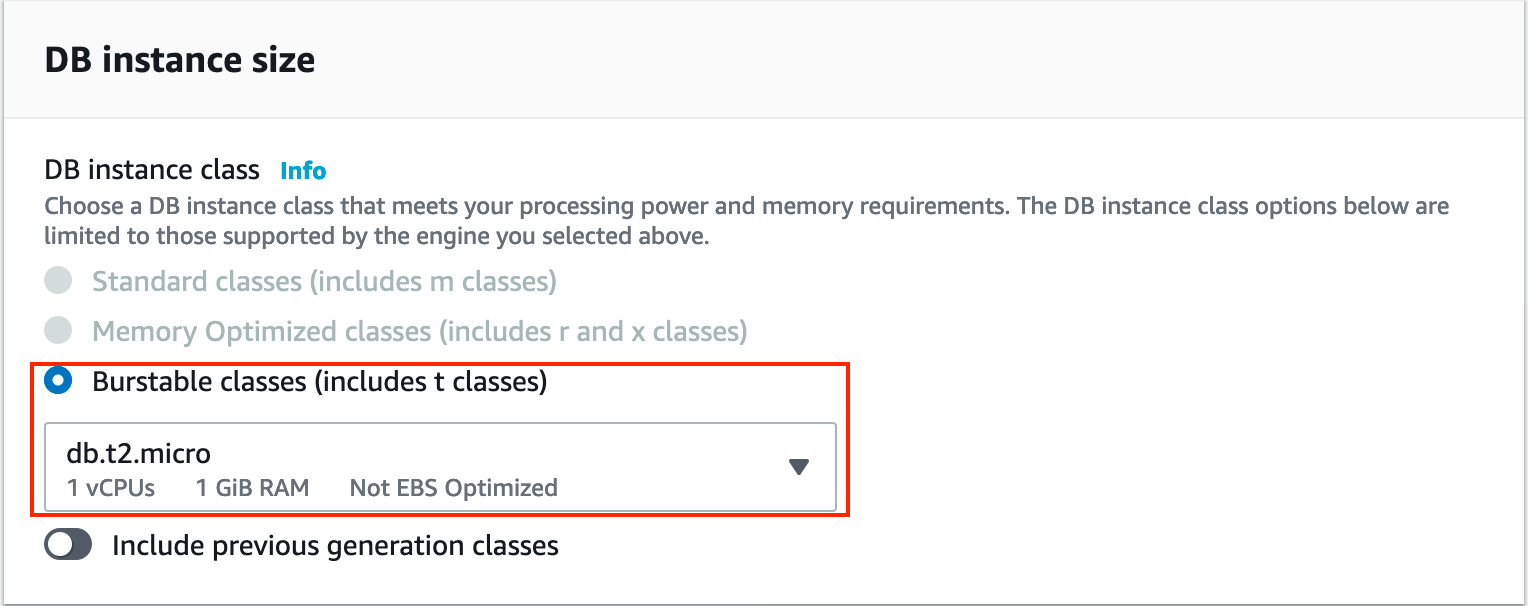

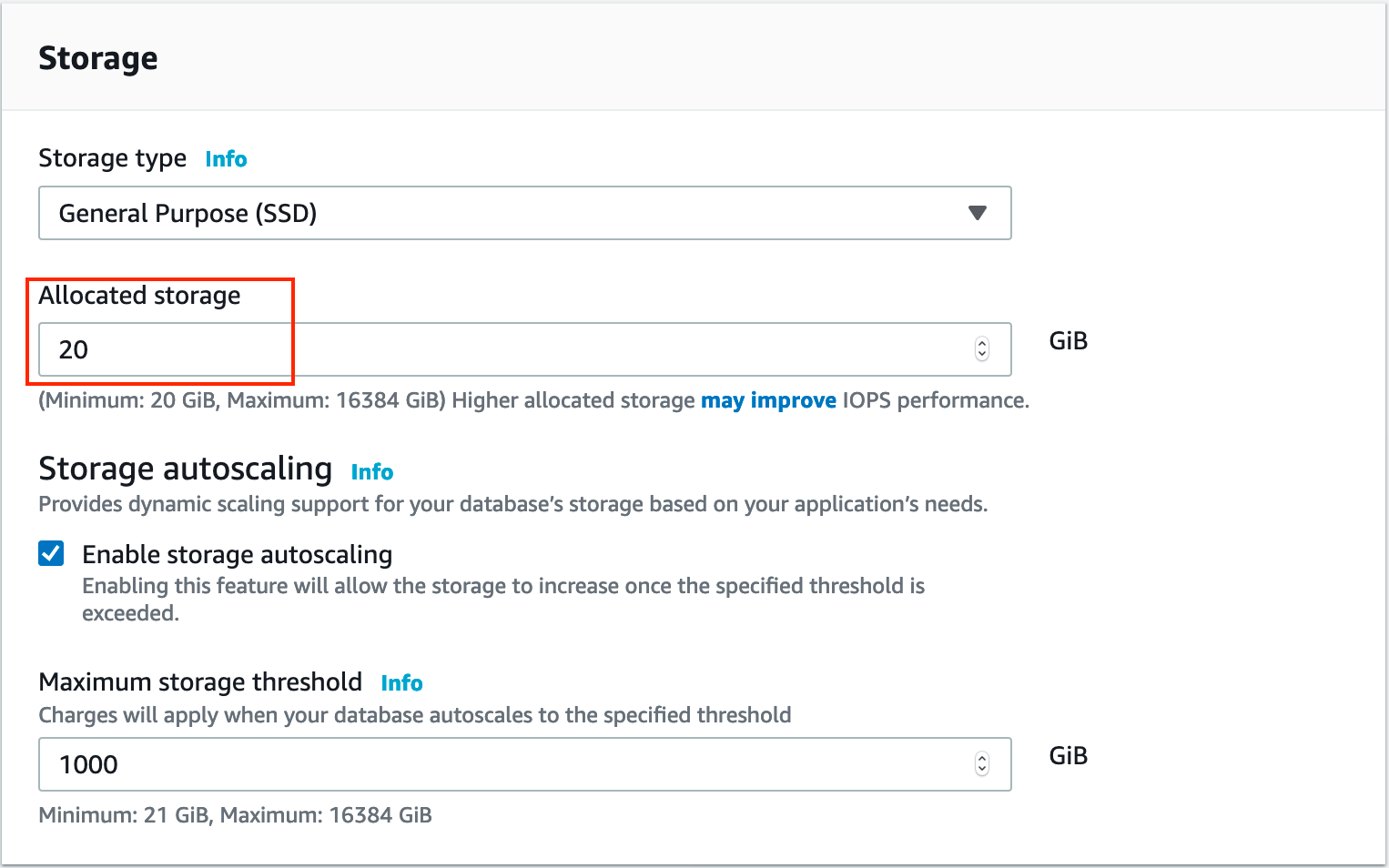

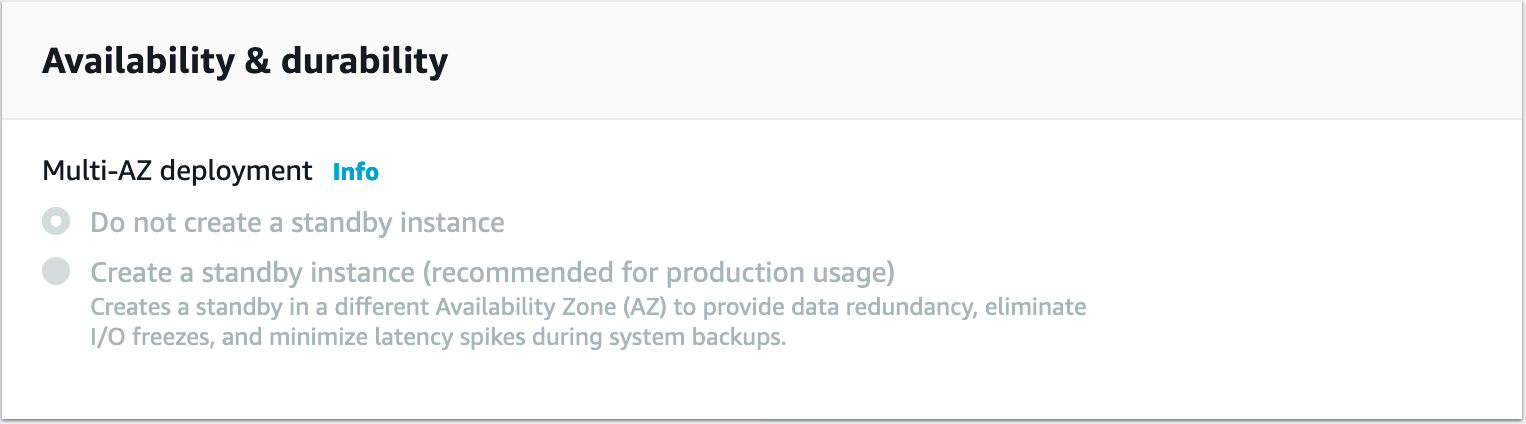

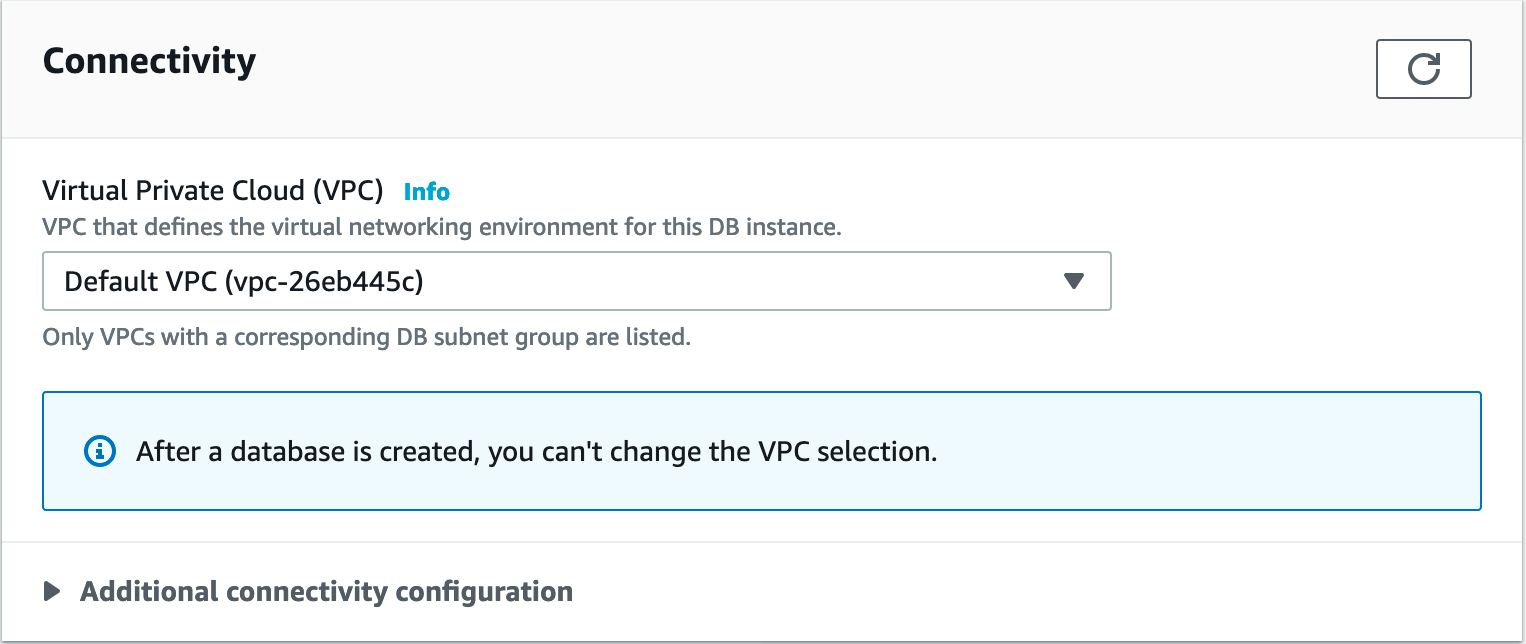

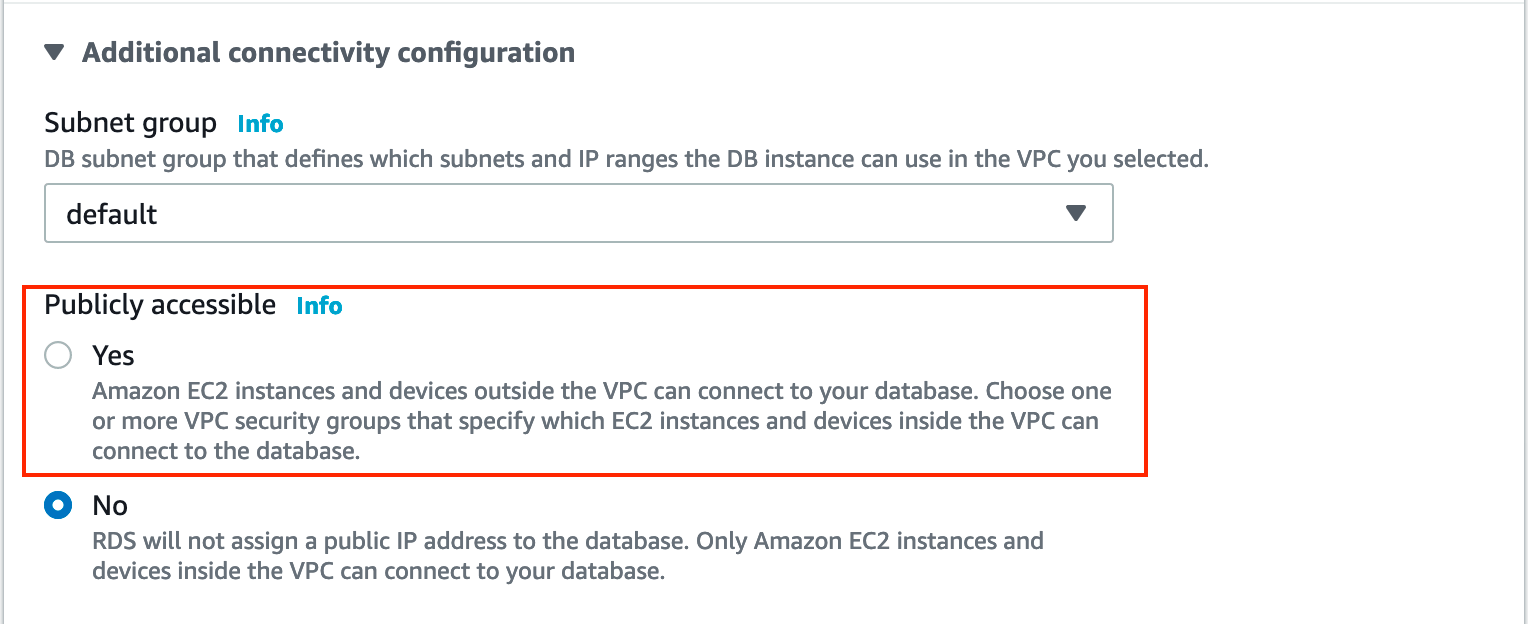

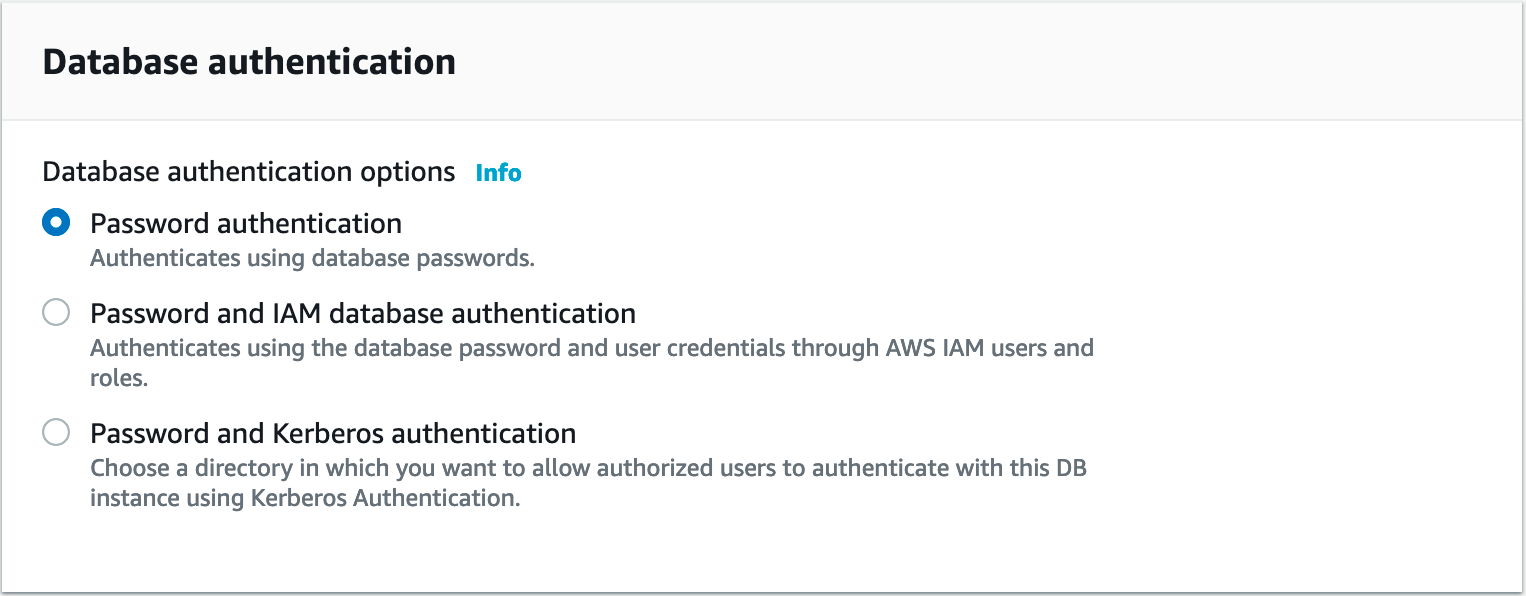

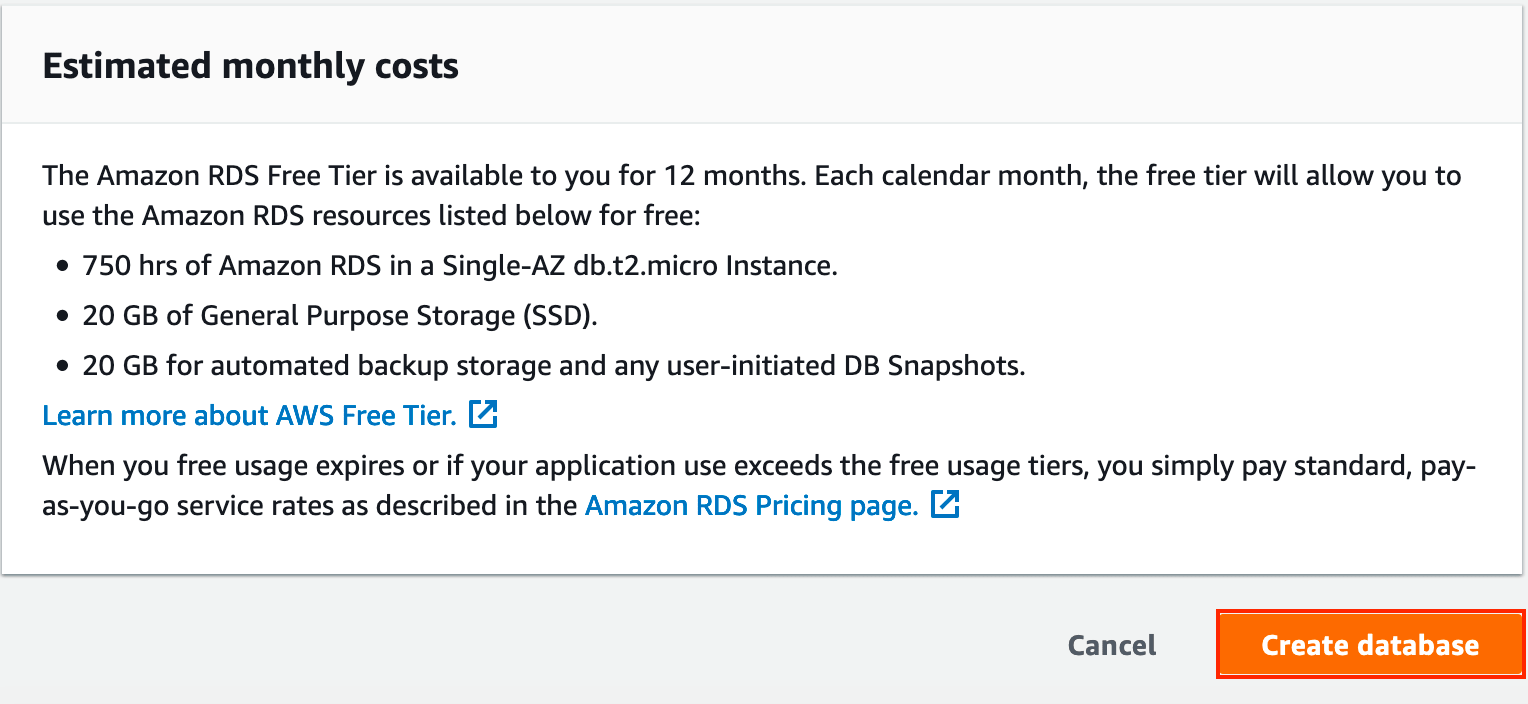

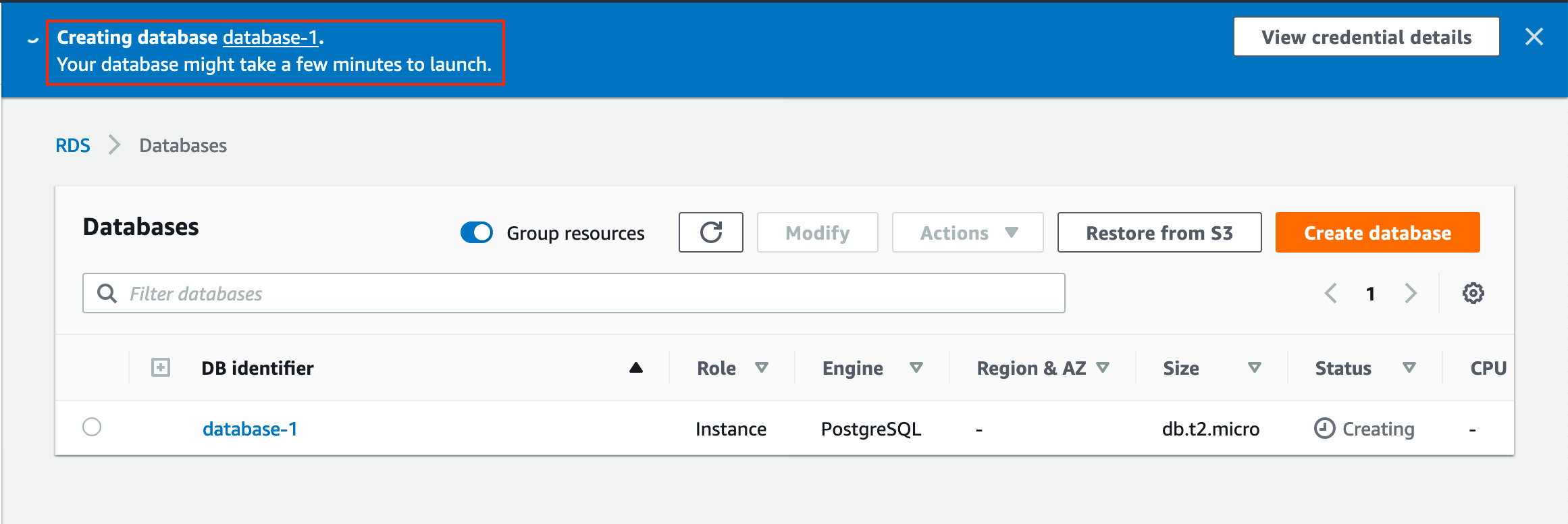

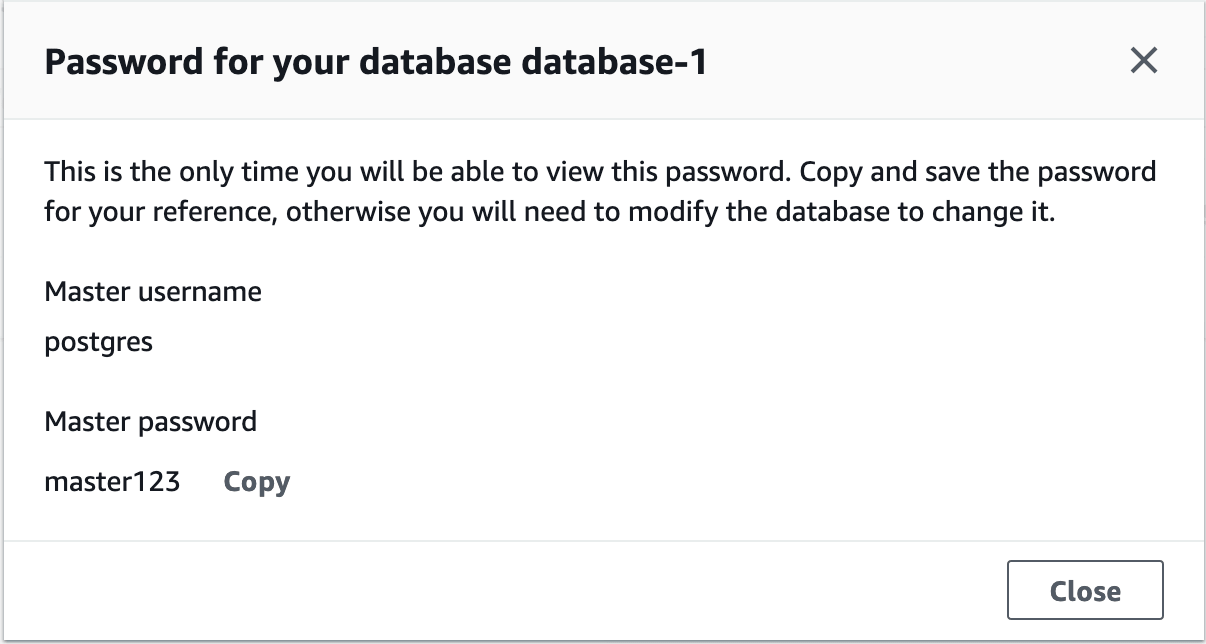

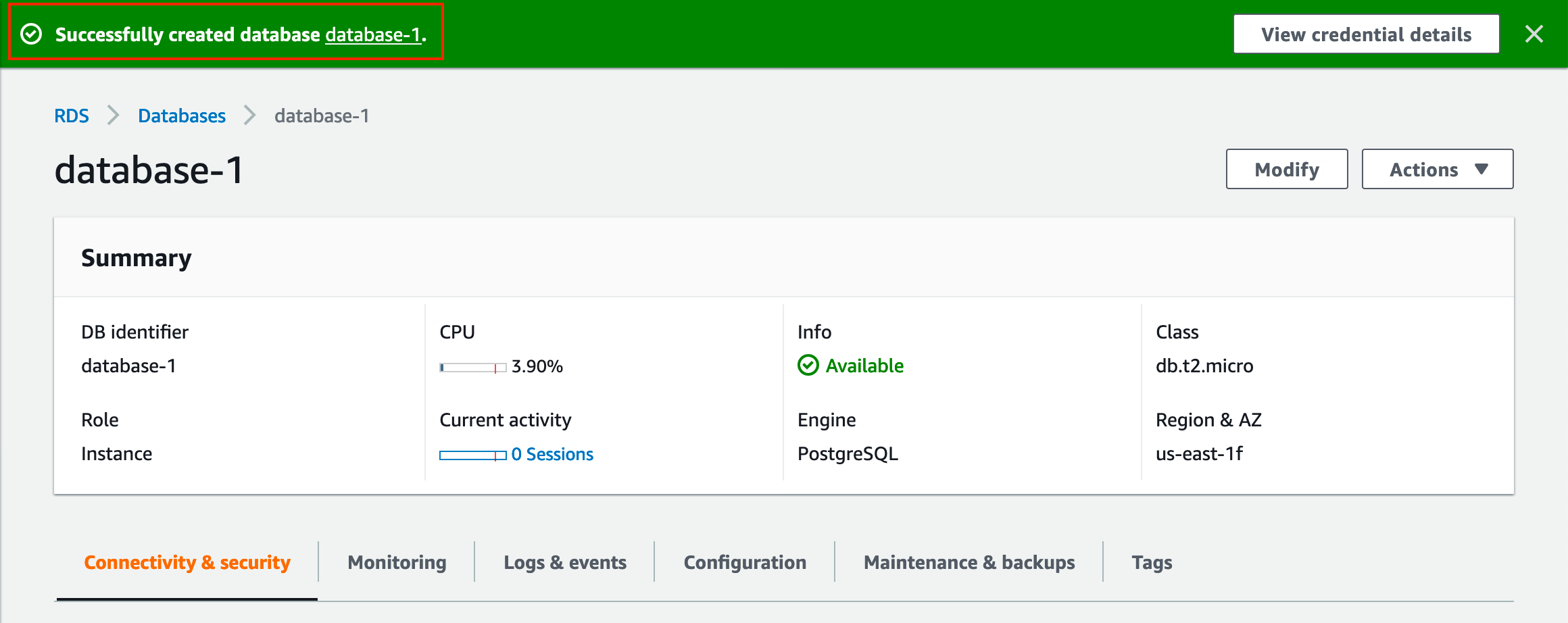

How to create AWS RDS PostgreSQL Database

How to create AWS rds PostgreSQL Database In this

Convert pem to ppk

Convert pem to ppk In this article, we will see

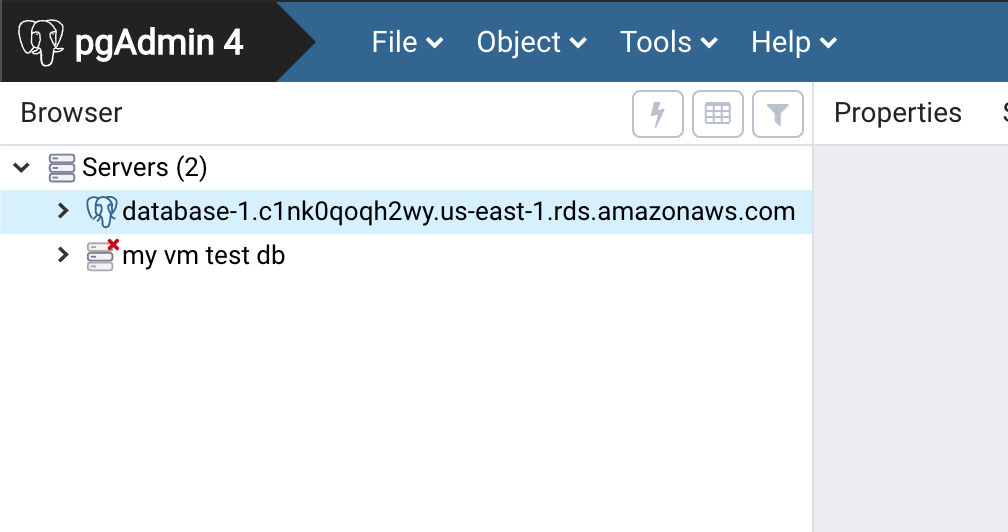

#3. Enter the Host Name/IP or AWS RDS endpoint name.

#3. Enter the Host Name/IP or AWS RDS endpoint name.

#4. Once you have added it successfully. Open and try to access the remote database.

#4. Once you have added it successfully. Open and try to access the remote database.

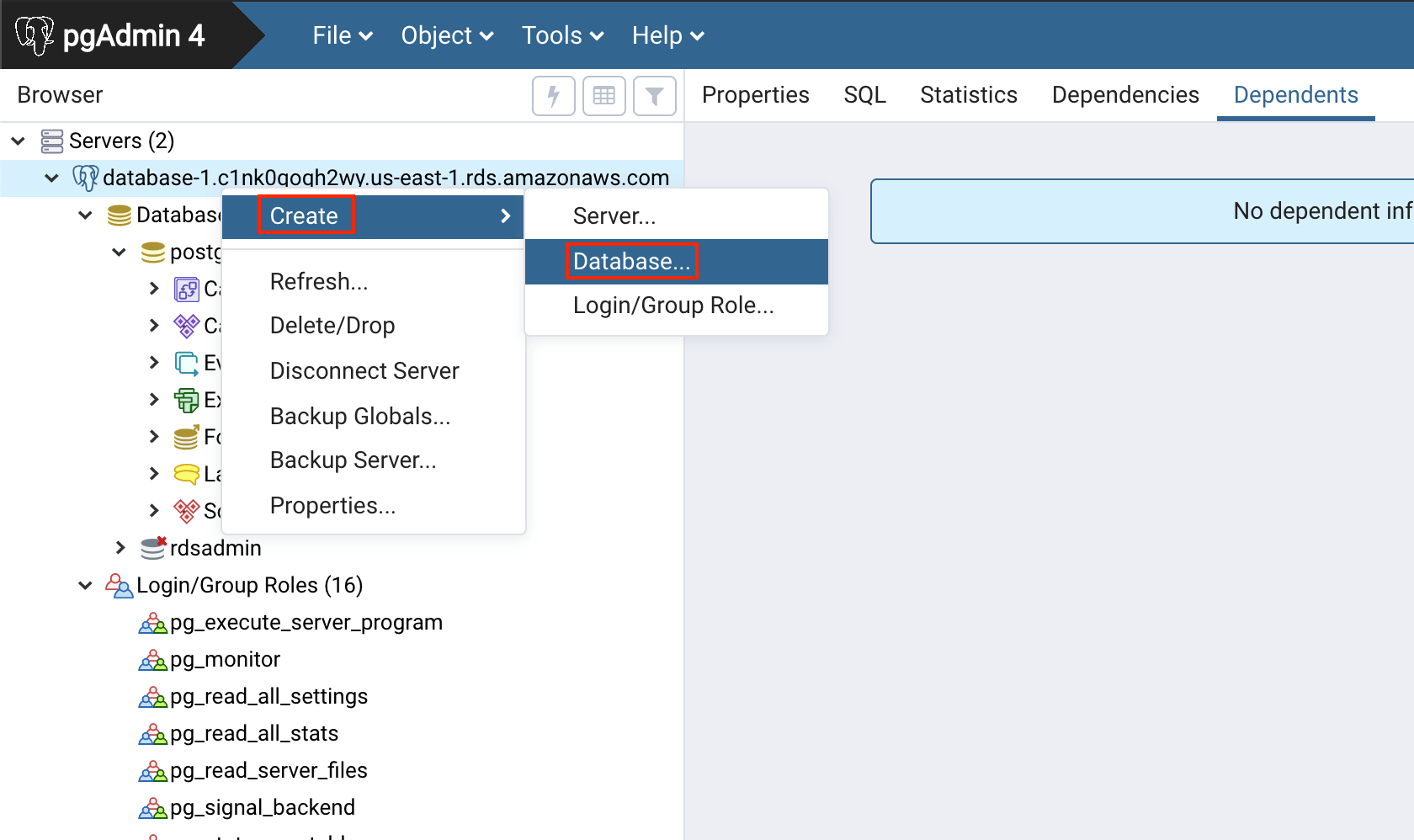

#5. Create a database for testing

#5. Create a database for testing

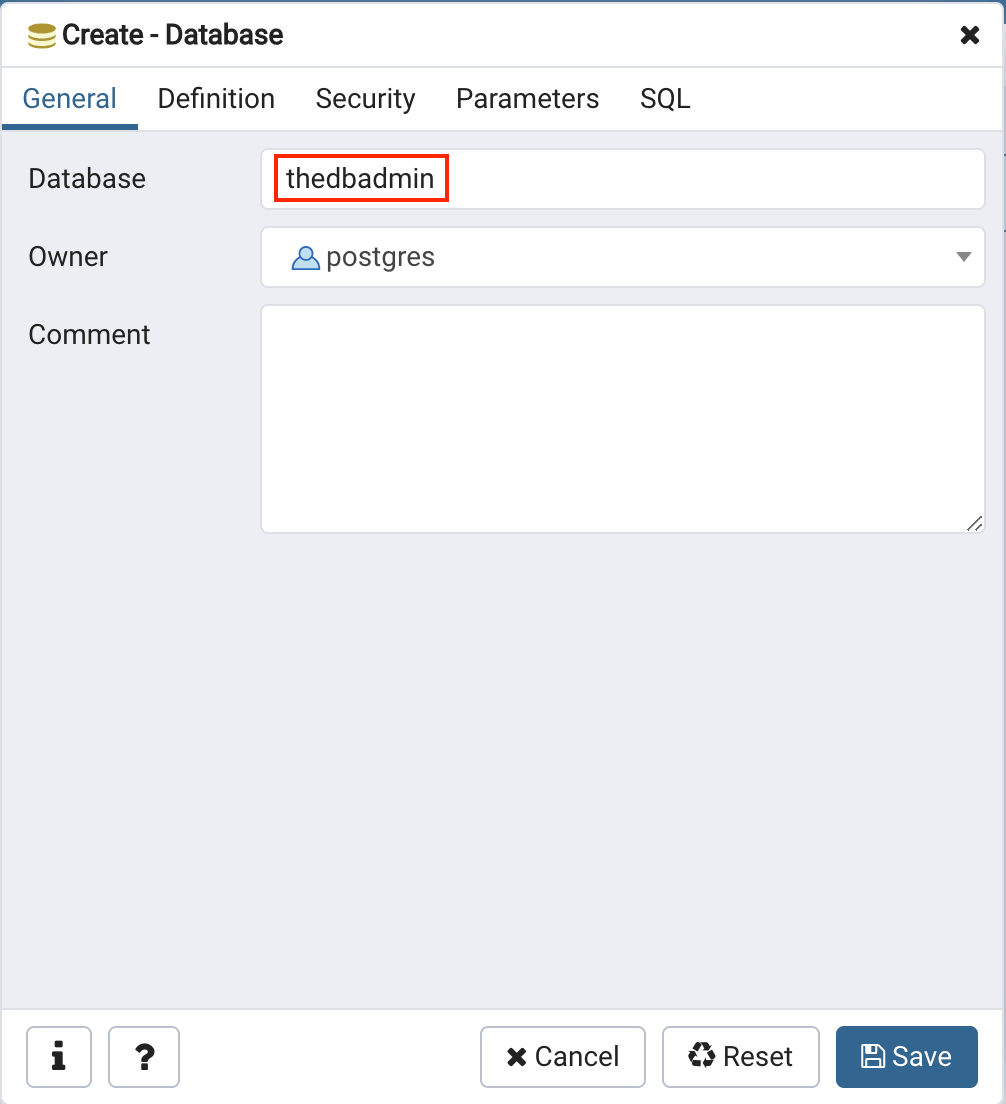

#6. Give database name and click on ‘save‘.

#6. Give database name and click on ‘save‘.

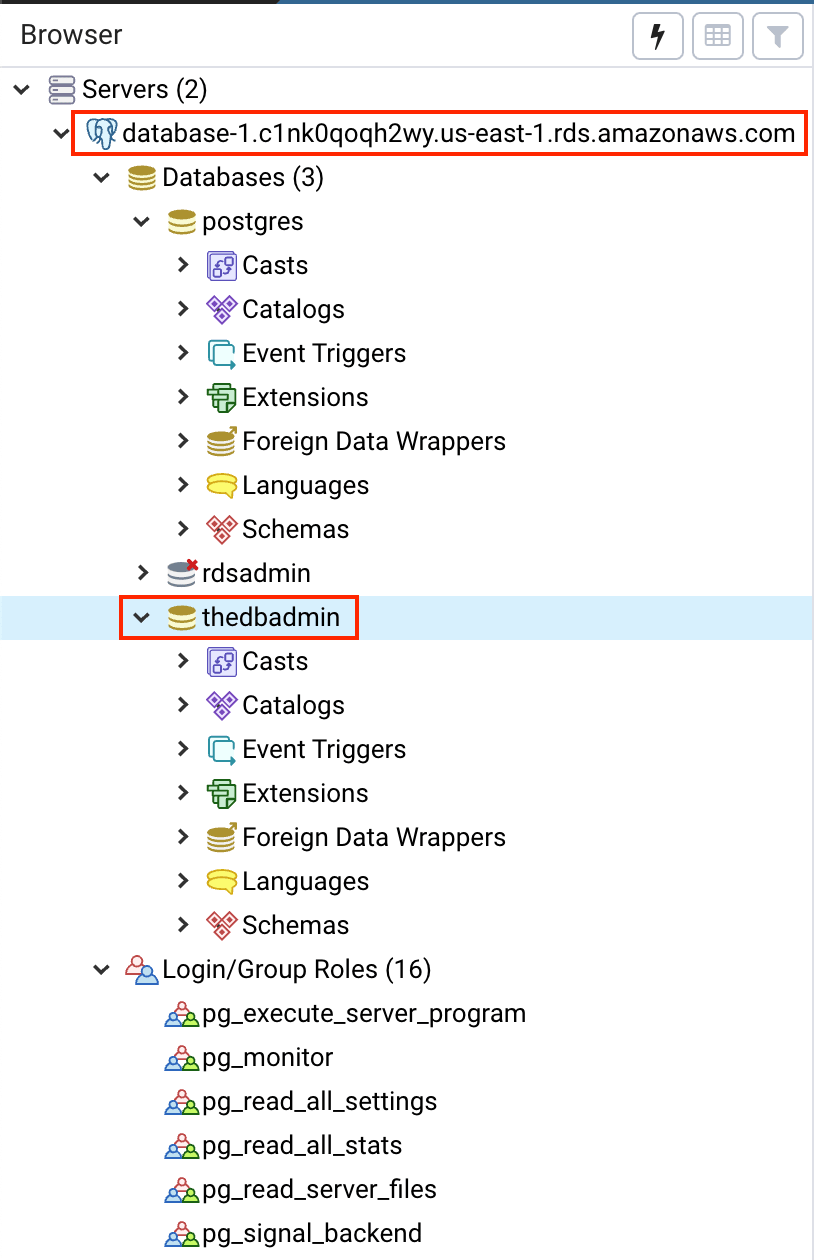

#7. Once created successfully it will ve visible under your connection name.

#7. Once created successfully it will ve visible under your connection name.

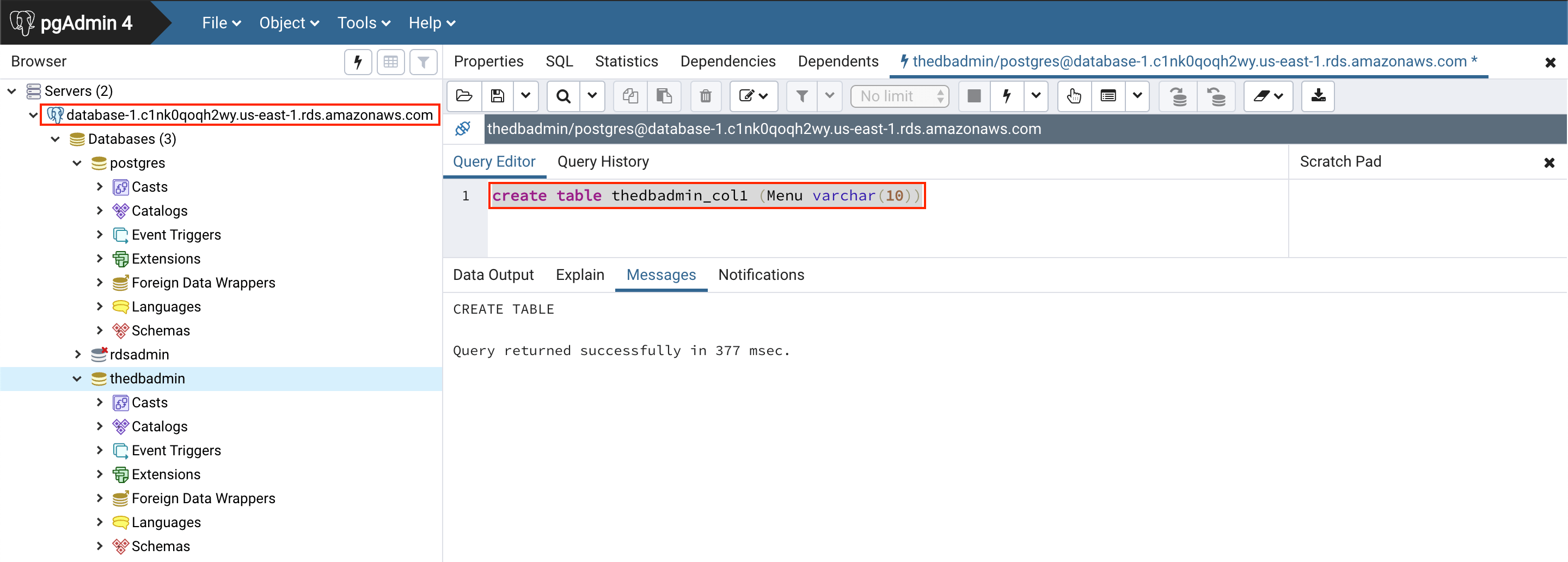

#8. Create a table under the newly created database.

#8. Create a table under the newly created database.

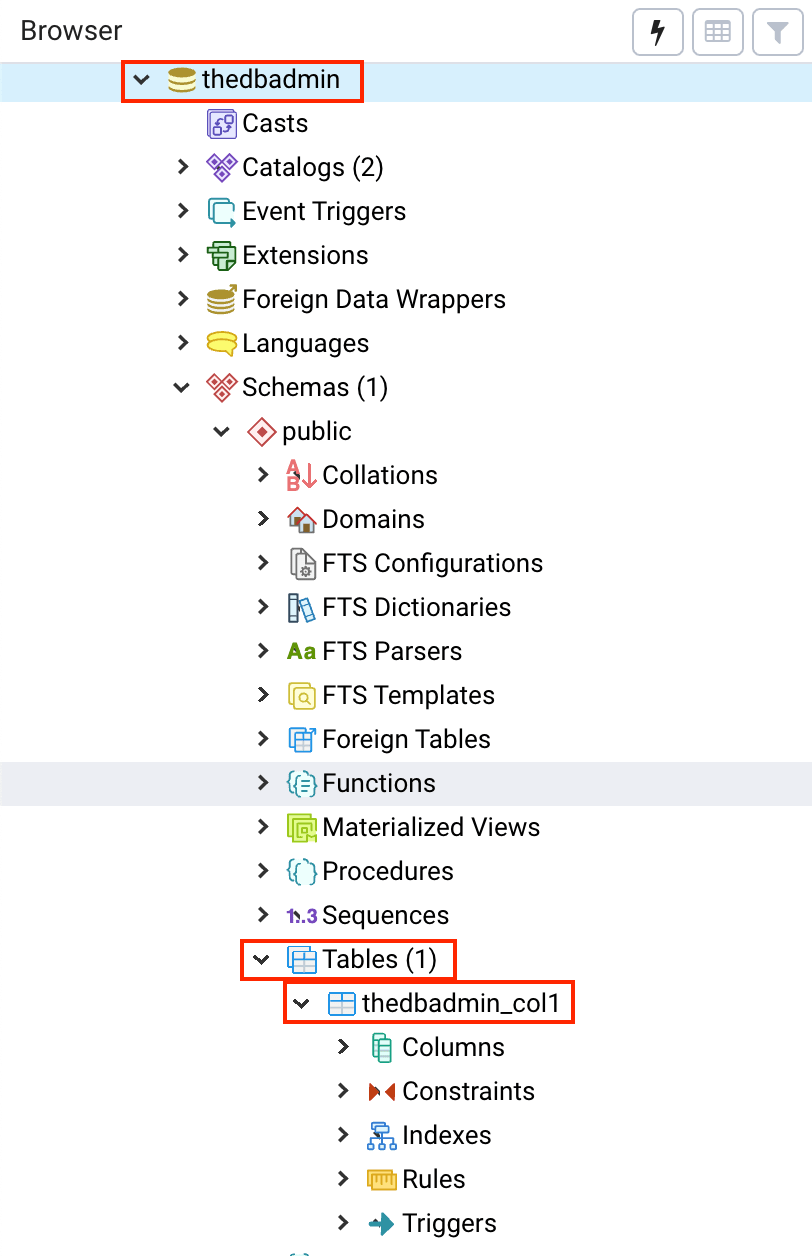

#9. You can see the newly created table under the database ‘thedbadmin’.

#9. You can see the newly created table under the database ‘thedbadmin’.

I hope you like this article.

Please comment if you have any doubts.
